OpenAI has been busy over the past year. The company has been the talk of the internet thanks to two big projects: Dall-E 2 and ChatGPT. Between them, these two artificial intelligence platforms generate images and long reams of text from nothing more than a written prompt.

Now the company is back with a third project, released just before Christmas to pique everybody's interest. Named Point-E, it follows a similar pattern, creating content from nothing but a prompt.

What is Point-E and how does it work?

In many ways, Point-E is a successor to Dall-E 2, even following the same naming convention. Where Dall-E 2 creates images from scratch, Point-E takes things a step further by turning images into 3D models.

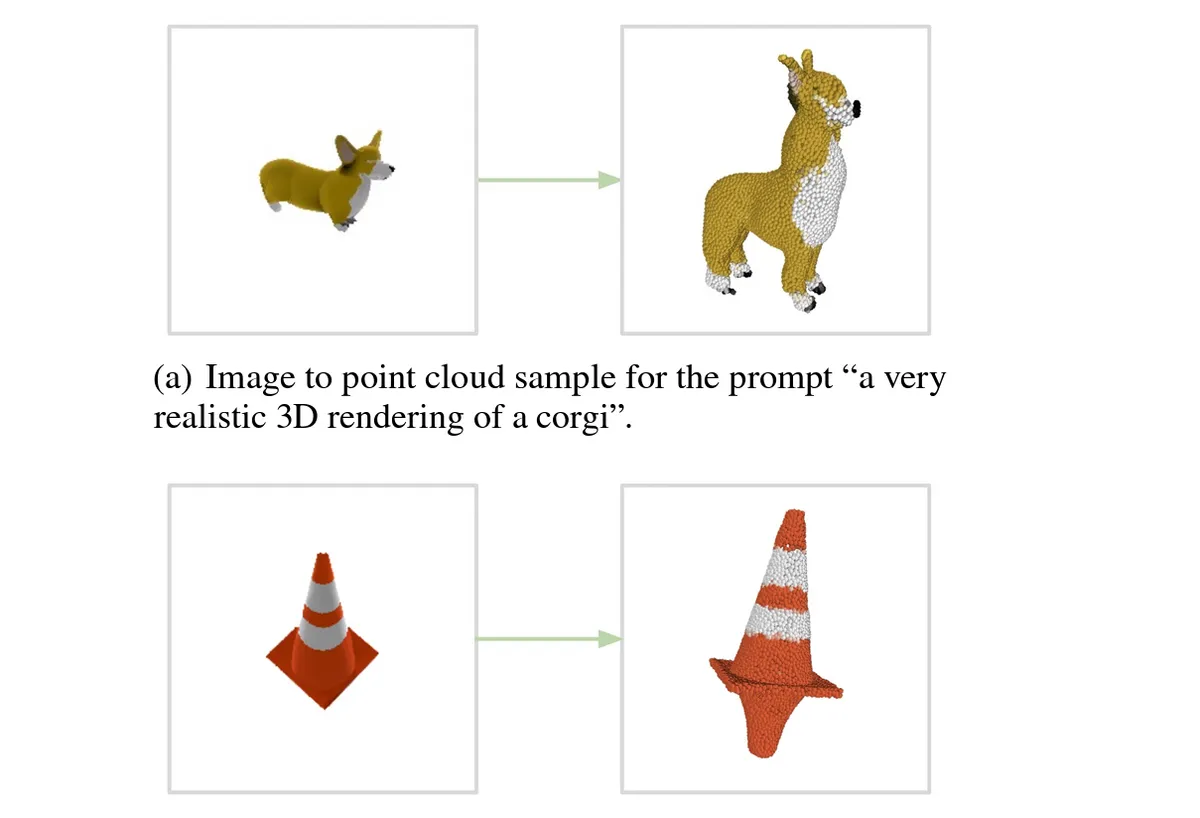

Announced in a research paper from the OpenAI team, Point-E works in two stages: a text-to-image model first converts your written prompt into an image, and a second model then turns that image into a 3D model.

Where Dall-E 2 aims for the highest-quality image possible, Point-E's image only needs to be good enough to form the basis of a 3D model, so it can be of much lower quality.

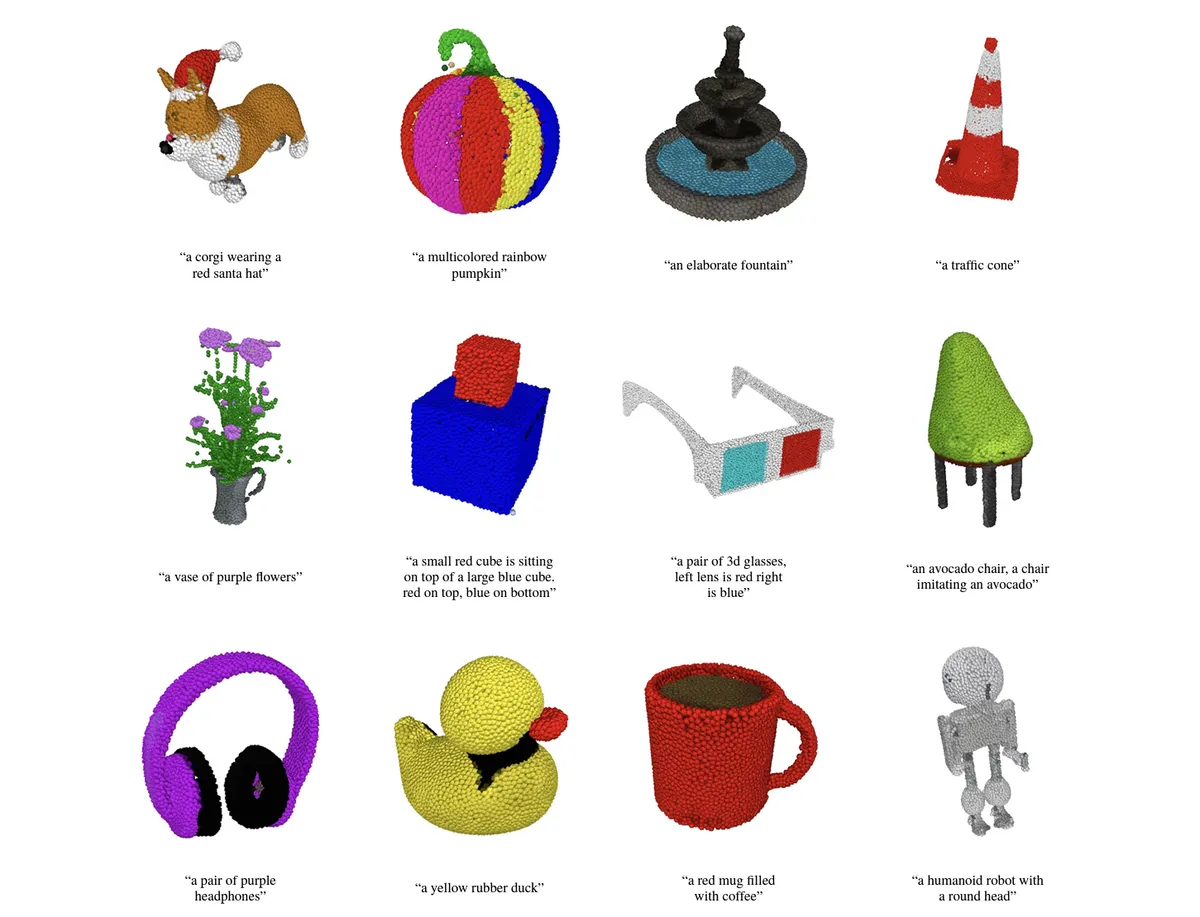

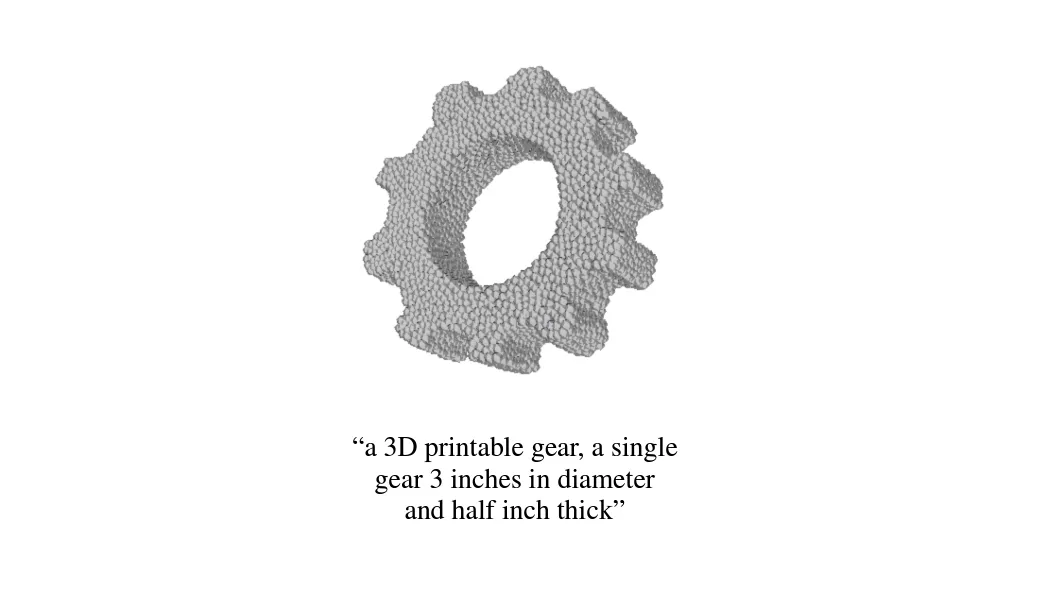

Unlike a traditional 3D model, Point-E doesn't generate a solid, continuous surface. Instead, it generates a point cloud (hence the name): a set of points scattered through 3D space that together represent the shape of an object.
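To make the idea concrete, here is a minimal Python sketch of what a coloured point cloud looks like as data: a list of points, each holding an x, y, z position and an RGB colour. This is not Point-E's code; the `Point` class, the `sample_sphere_cloud` and `bounding_box` helpers, and the choice of 1,024 points on a sphere are hypothetical, picked only to illustrate the representation.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Point:
    """One point in the cloud: a 3D position plus an RGB colour in [0, 1]."""
    x: float
    y: float
    z: float
    r: float
    g: float
    b: float


def sample_sphere_cloud(n, radius=1.0, colour=(1.0, 0.0, 0.0), seed=0):
    """Hypothetical helper: scatter n coloured points evenly over a sphere's surface."""
    rng = random.Random(seed)
    cloud = []
    for _ in range(n):
        # Picking z uniformly in [-1, 1) and an angle uniformly in [0, 2*pi)
        # spreads directions evenly over the sphere (Archimedes' hat-box theorem).
        z = rng.uniform(-1.0, 1.0)
        theta = rng.uniform(0.0, 2.0 * math.pi)
        ring = math.sqrt(1.0 - z * z)  # radius of the horizontal circle at height z
        cloud.append(Point(radius * ring * math.cos(theta),
                           radius * ring * math.sin(theta),
                           radius * z,
                           *colour))
    return cloud


def bounding_box(cloud):
    """Return the (min corner, max corner) of the axis-aligned box enclosing the cloud."""
    xs = [p.x for p in cloud]
    ys = [p.y for p in cloud]
    zs = [p.z for p in cloud]
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


if __name__ == "__main__":
    cloud = sample_sphere_cloud(1024)
    print(len(cloud), "points")
    print("first point:", cloud[0])
    print("bounding box:", bounding_box(cloud))
```

Each point carries just six numbers, and nothing connects one point to another. That lack of connecting surface is why a cloud on its own looks sparse, and why a separate meshing step is needed to produce a solid-looking object.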

On its own, a cloud of points doesn't look like much, which is why there is an extra step. The team trained an additional AI model to convert the points into meshes: surfaces built from connected polygons that better capture an object's shape, contours, and edges.

With this many moving parts, the results aren't always perfect. As OpenAI notes in the research paper, objects can be missing points, or the conversion can produce blocky shapes.

Training the model

Like any machine learning system, Point-E had to be trained before it could work. The first half of the process, the text-to-image stage, was trained on images paired with text descriptions, just like Dall-E 2 before it. These descriptions, much like alt-text, help the model learn what each image contains.

The image-to-3D stage was trained in a similar way, on a set of images paired with 3D models, so that Point-E could learn the relationship between the two.

According to the paper, this training drew on a dataset of several million 3D models. In early tests, Point-E was able to produce rough, coloured point-cloud approximations of the requested objects, but they were still a long way from accurate representations.

The technology is still at an early stage, and it will likely be a while before Point-E produces accurate 3D models, and longer still before the public uses it the way it uses Dall-E 2 or ChatGPT.

How to use Point-E

While OpenAI hasn't officially launched Point-E as a product, the code is available on GitHub for the more technically minded. Alternatively, you can try the technology through Hugging Face, a machine learning community that has previously hosted other big artificial intelligence programs.

The technology is still in its infancy, so it won't produce the most accurate results, but it gives a sense of where things are heading. Expect the occasional long wait or load time, since lots of people are likely to be trying it via Hugging Face.

It isn't yet clear whether OpenAI will open the service to the public when it launches, or whether access will be invite-only at first.

The application of Point-E

With most modern artificial intelligence programs, the question of what they are actually for arises quickly. With both ChatGPT and Dall-E 2, there are growing concerns about these platforms replacing artists and other creatives.

The same concerns will likely surface for Point-E. 3D design is a huge industry and, while Point-E can't match the work of a 3D artist right now, it could compete with them in the future.

However, with reports that OpenAI is spending millions of dollars each month to keep these projects running, this kind of software is likely to be costly to run and use, especially for something as computationally demanding as 3D generation.
