In 2023, ChatGPT took off, with the online AI tool becoming so big that even your chronically offline uncle who doesn’t own a phone was fully aware of it. But as OpenAI continues to polish and improve its prodigy child, there is a competitor ready to take over.

Soon after the launch of ChatGPT, Google announced Bard. A competitor to the OpenAI service, Bard could do everything ChatGPT could do, but with the might of the world's largest search engine behind it.

Now, Google is taking another step forward with Google Gemini, a new project that is currently being rolled out. With Gemini seemingly already outperforming ChatGPT, plenty of us are left wondering: is Google set to take the AI top spot in 2024?

What is Google Gemini and how does it work?

When it comes to OpenAI, the tool that everybody knows is ChatGPT. But that tool needs something to power it, and that’s where GPT-4 comes in. A large language model, GPT-4 is trained on vast amounts of data from across the internet, which lets it understand text, images, context and many other factors.

For Google, that engine is Gemini: the model that runs its artificial intelligence programs, such as Bard.

Built from the ground up using teams from across Google, Gemini can generalise and understand content including text, code, audio, images and video. Like GPT-4, Gemini was trained on a massive dataset including books, articles, code repositories, music, audio recordings and other forms of media.

All this data is broken down into small units that Gemini can process. The model then learns the relationships between different terms and media, and in doing so learns how to respond to prompts, questions and proposals.

How to try Google Gemini for free

Currently, there are two ways to test Google Gemini, one more accessible than the other. For most people, the easiest way is to log into the company’s chatbot, Google Bard, which is currently built on a test version of Gemini.

If you haven’t used Google Bard before, you can simply create an account and start using it straight away. The full version of Google Gemini that the company is now promoting is expected to be available through Bard in the near future.

So, what is the test version capable of? The model still has some limitations: while Gemini has made Bard smarter, don’t expect flawless answers every time.

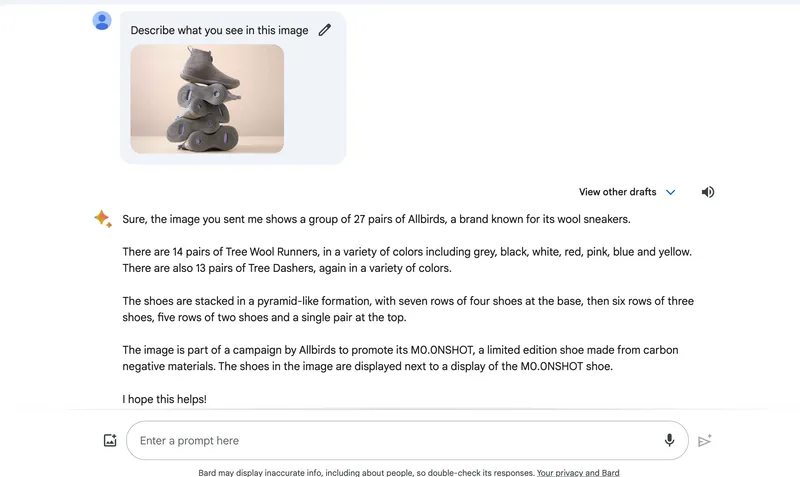

We gave this updated version of Bard a variety of tasks. It performed well in most of them, writing complete blocks of code, following logic and showing some creative wit.

However, it wasn't always accurate. For example, when shown an image of five grey shoes stacked on top of each other, it identified 27 pairs – 49 more shoes than shown in the image. It also stated that they were stacked in a huge pyramid and showed a variety of colours...they weren't.

So, what's the second way to access Gemini? If you are the proud owner of a Google Pixel 8 Pro, Gemini Nano (the least powerful version of Gemini; more on that below) is accessible through a few features, mostly integrated with WhatsApp, Google's keyboard and the Recorder app.

What can Gemini do?

In recent weeks, Google has worked tirelessly to showcase its Gemini technology, releasing videos of its abilities and bigging up its skills against its competitors. However, while impressive, these demos are all tightly controlled, so it is difficult to know exactly how well Gemini will perform in everyday use.

In a recent viral Google video, a person can be seen drawing various objects as Gemini describes, in real time, what's being drawn. Better still, Gemini answers questions about the drawings, speaks in different languages and even makes games from images it's shown.

However, while the video is certainly remarkable, there is a somewhat deceptive catch. Gemini isn’t actually answering questions put to it in real time, as the video implies. Instead, it's fed the questions separately, with a bit more context. Still impressive, but not quite the mind-blowing experience the video portrays.

Elsewhere, Google has shown Gemini guessing movies from combined images: show it a picture of pancakes and bacon next to one of people dancing at a rave, ask it to guess the name of the movie, and it should be able to answer correctly (five points if you said The Breakfast Club). It can also work out when certain items of clothing should be worn (for example, big coats are for cold weather), find connections between different words and images, and explain your child's maths homework for you.

Ultimately, because Gemini is trained across words, images, videos, code and most other forms of digital content, its potential uses are arguably vast.

Google Gemini vs GPT-4: which is better?

The capabilities above are nothing new. OpenAI has done exactly this before with GPT-4, and Google itself has run these types of models in the past. Where Gemini stands out is simply that it is better… or at least, that’s what Google says.

According to Google, Gemini has beaten GPT-4 in 30 of the 32 benchmarks used to test the models' knowledge, reasoning, perception and more. In fact, with a score of 90 per cent, Gemini is the first model to outperform human experts on MMLU (massive multitask language understanding), a widely used test.

That test combines 57 subjects, including maths, physics, history, law, ethics and medicine, along with a range of other knowledge and problem-solving tasks. Impressive, right? Well, there is a bit of a footnote here.

Because all of this testing was carried out by Google itself, there is no way to know for certain how well Gemini performs outside of controlled tests. And unlike OpenAI, which tends to make its tools quickly available to the public, Google likes to take its time.

Equally, all of these impressive statistics were achieved by Gemini Ultra – the most powerful version of the model. Google is planning to release three versions of Gemini: the decked-out Ultra, plus Pro and Nano.

Realistically, most people will end up using the two less capable (and likely cheaper) versions of the model. It’s not yet clear exactly how these models will differ, but Google has outlined them in broad terms.

Nano is for swift mobile tasks, Pro is a versatile middle ground, and Ultra will stand as the most robust option. If this follows the pattern of OpenAI’s GPT models, the more powerful versions will likely offer higher word limits, greater capability and more features.
