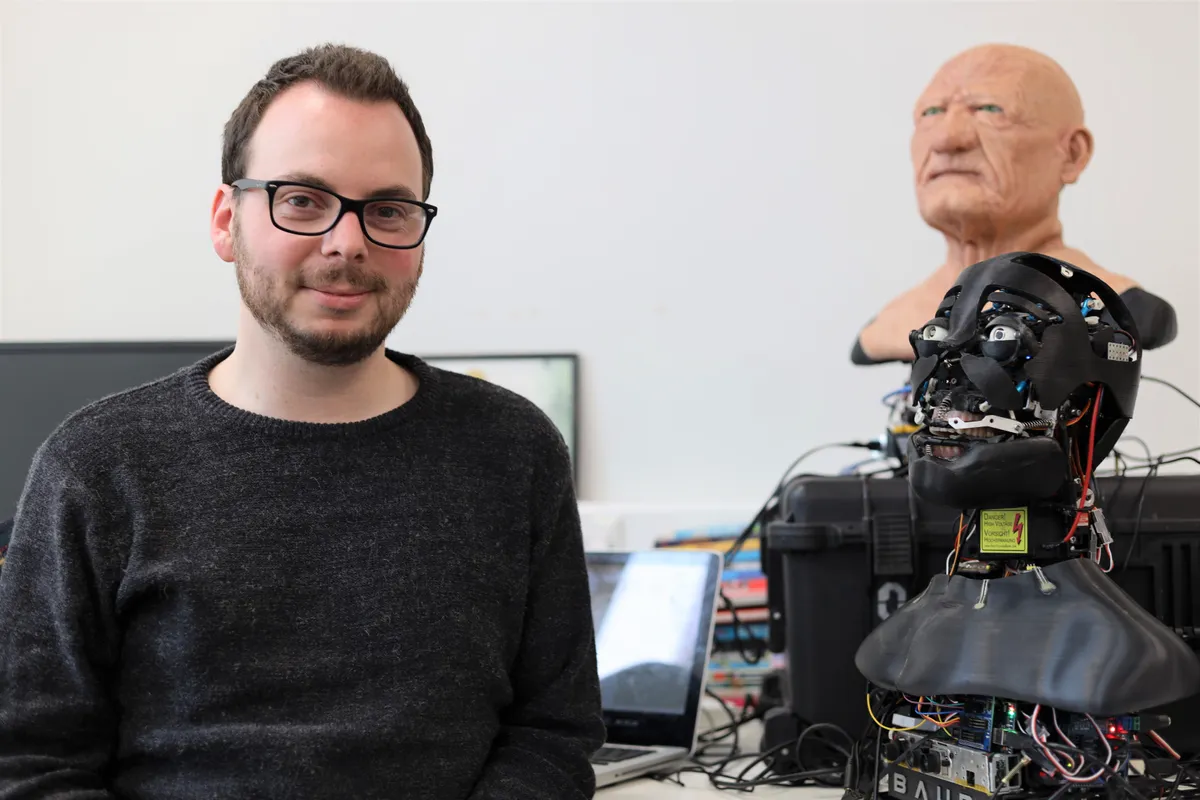

Dr Carl Strathearn, a research fellow at the School of Computing at Edinburgh Napier University, talks to BBC Science Focus commissioning editor Jason Goodyer about his research on realistic humanoid robots.

What is the uncanny valley?

The uncanny valley is a point where things like humanoid robots and CGI characters start to give us an eerie feeling. The reason is that they’re not perfect representations of humans – they never quite get there. So, they evoke feelings of terror, unease and unfriendliness.

From birth we’re able to detect and analyse faces. And faces play such an important part in our communication. When we start to see things that shouldn’t be there, things that are out of place, we do get that feeling of repulsion. It’s not just appearance, though, it’s in functionality as well. The way robots move, say. If a robot doesn’t move the way we expect it to move, that again gives that feeling of unnaturalness and uneasiness.

Your work focuses on matching facial movements to speech. Why does that play such an important role in this?

The two key areas in the uncanny valley theorem are the eyes and the mouth. When we communicate, our attention goes between the eyes and the mouth. We look at the eyes to get attention and we look at the mouth for speech reading, for understanding. And with robots particularly, anything that’s outside the realm of natural lip movements can be confusing and disorienting for us. Especially if you’re interacting over a certain amount of time.

How did the project start?

When I was first doing this project, I was actually helping teach in the animation department because the previous university I was at didn’t quite have a robotics department. So that’s where these ideas started to come together. They use programs like one called Oculus, which basically takes speech and converts it into a CGI mouth with lip positions.

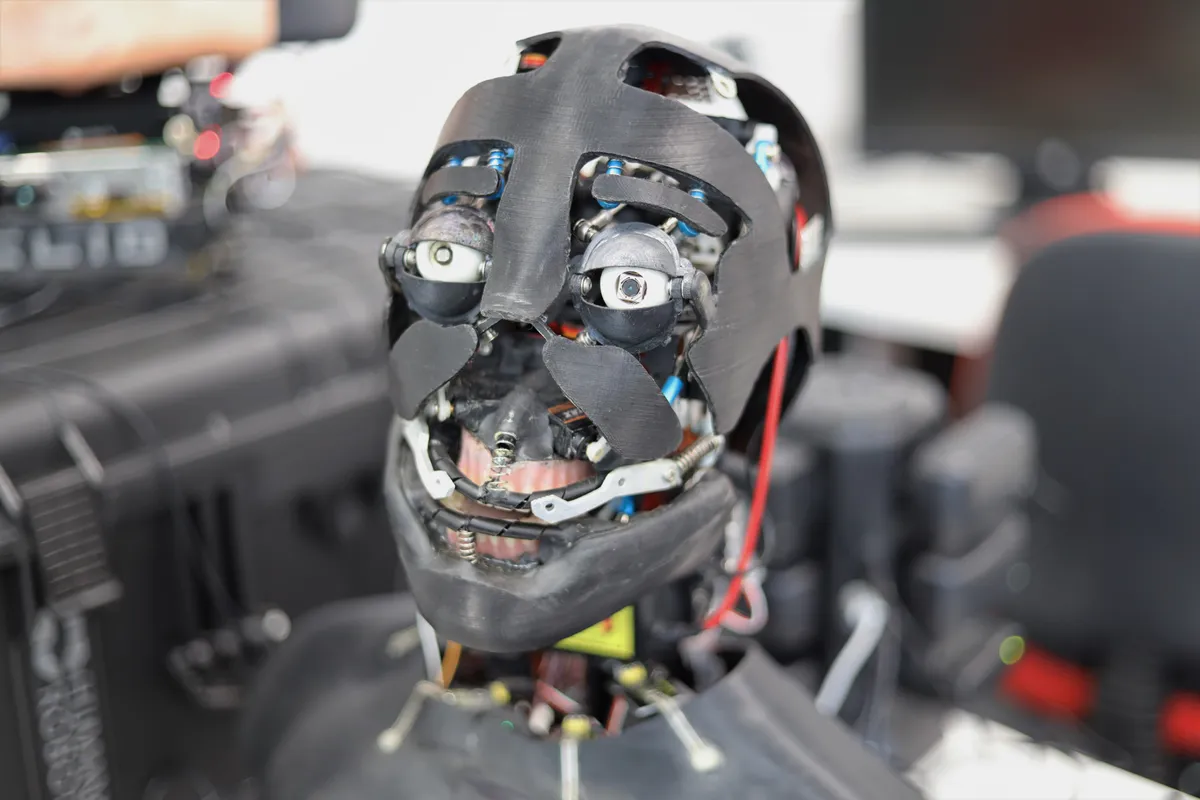

So, it automatically reads speech and extracts the visemes [a lip shape used to form a particular sound] for the mouth positions and I wanted to do that with the robot. So, I created a robot mouth modelled on the human mouth.

But before I did that, I looked at previous robotic mouth systems to see what was missing. And that was really important just to be able to see what the key muscles were, what muscles work together, and what can be left out of this mouth.

Obviously, it’s a very small area and you’re confined to what you could actually put into a robotic mouth. One of the key things I found that was missing was something called the buccinator muscles, which are the muscles at the corners of the mouth – not the cheek muscles. They’re used for pursing and stretching the lips when we create vowel and consonant sounds. So, I replicated these muscles and I created a robotic mouth prototype.

Where does the software part come in?

I thought, ‘Right, the next stage is to create an application that can take these lip shapes and put them into this robotic mouth.’ So, we used something called a viseme chart. It’s something that’s used a lot for CGI in game design – basically it’s a list of sounds and the matching mouth shape – and I made my robot make these shapes. For each sound – the Ahs, Rs, and Oos – I had all these robotic mouth positions. And I collected and saved them into a configuration file so I’d be able to bring them out later and use them.
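As a rough illustration of the idea of a viseme chart saved to a configuration file, here is a minimal Python sketch. Everything specific in it is an assumption made for illustration: the sound labels, the actuator names (`jaw`, `lip_corners`, `lip_pucker`), the 0.0 to 1.0 position scale and the JSON file format are hypothetical and are not taken from Dr Strathearn's system.

```python
import json

# Hypothetical viseme chart: sound label -> actuator positions.
# Positions are on an assumed scale from 0.0 (relaxed) to 1.0 (fully extended).
VISEME_CHART = {
    "rest": {"jaw": 0.0, "lip_corners": 0.5, "lip_pucker": 0.0},
    "ah": {"jaw": 0.8, "lip_corners": 0.5, "lip_pucker": 0.0},
    "r": {"jaw": 0.3, "lip_corners": 0.4, "lip_pucker": 0.5},
    "oo": {"jaw": 0.2, "lip_corners": 0.2, "lip_pucker": 0.9},
}


def save_chart(chart, path):
    """Write the chart to a JSON configuration file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(chart, f, indent=2)


def load_chart(path):
    """Read a previously saved chart back from disk."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def pose_for(chart, sound):
    """Return the mouth pose for a sound, falling back to the rest pose."""
    return chart.get(sound, chart["rest"])
```

Saving the poses once and loading them later mirrors the workflow described above: the mouth shapes are recorded by hand, then looked up by sound label at run time.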

The next part was creating a system that could handle speech [not just pure simple sounds]. But I wanted to do it live, so there was no room for processing time, because if you use processing time, then the speech becomes unnatural as there are lots of huge pauses in the conversation. So, I created a machine learning algorithm to take speech synthesis, which is robotic speech like you have on Siri, out of the laptop and into a microprocessor that turned that audio data back into numerical data. Part of it also went into a processing system so I could actually see the sound wave like you see in a recording studio.

Can you tell me a bit more about how the system works?

I created a machine learning algorithm that could recognise patterns in the incoming speech. That was done not by monitoring the speech itself as such, but the patterns in the waveform. So, you’re looking at the pixel size, the length of each word and each sound, and then feeding the system a bunch of samples.

That way it kind of knew what it was looking for. And when it came across [a sound it was familiar with], it was able to transfigure the robot mouth system to match the positions that I had mapped on the chart. That worked surprisingly well.
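The interview does not say which machine learning algorithm was used, so the following Python sketch swaps in a simple nearest-neighbour matcher purely to illustrate the idea of recognising a sound from the shape of its waveform. The features (duration, peak height and zero-crossing rate), the function names and the synthetic `tone` helper are all assumptions for illustration, not the actual system.

```python
import math


def tone(freq_hz, seconds=0.1, sample_rate=8000):
    """Synthetic stand-in for a recorded sound: a plain sine wave."""
    count = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate) for n in range(count)]


def features(samples, sample_rate=8000):
    """Describe a waveform by its length, height and how often it crosses zero."""
    duration = len(samples) / sample_rate
    peak = max(abs(s) for s in samples)
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    crossing_rate = crossings / max(len(samples) - 1, 1)
    return (duration, peak, crossing_rate)


def train(examples, sample_rate=8000):
    """Store one feature vector per labelled sample: (label, samples) pairs."""
    return [(label, features(samples, sample_rate)) for label, samples in examples]


def classify(model, samples, sample_rate=8000):
    """Return the label of the stored sample whose features are closest."""
    target = features(samples, sample_rate)
    label, _ = min(model, key=lambda item: math.dist(item[1], target))
    return label
```

In use, the label returned by `classify` would be the key used to look up a mouth position on the viseme chart. A real system would need recorded speech and far richer features than this toy example.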

The next thing was what I call the voice patterning system, which works with syllables. Obviously, when you talk your jaw moves up and down in time with syllables. So, that was the next stage to create this patterning system, which meant if there was no sound, the mouth was shut, and the louder the sound, the wider the mouth.
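The rule described here (no sound means a shut mouth, and louder sound means a wider mouth) can be sketched as a loudness-to-jaw mapping. This Python example is a minimal sketch under stated assumptions: the frame size, the silence threshold, the `full_scale` loudness and the 0.0 to 1.0 jaw scale are hypothetical values chosen for illustration.

```python
import math


def jaw_openings(samples, frame_size=160, silence=0.02, full_scale=0.5):
    """Map each audio frame to a jaw opening between 0.0 (shut) and 1.0 (wide).

    samples: audio amplitudes in the range -1.0 to 1.0 (assumed).
    frame_size: samples per frame (160 is 20 ms at an assumed 8 kHz).
    silence: loudness below this keeps the mouth shut.
    full_scale: loudness at or above this opens the mouth fully.
    """
    openings = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        # Root-mean-square amplitude is a simple measure of loudness.
        loudness = math.sqrt(sum(s * s for s in frame) / len(frame))
        if loudness < silence:
            openings.append(0.0)
        else:
            openings.append(min(loudness / full_scale, 1.0))
    return openings
```

Because each frame is handled independently, the mapping can run on live audio without waiting for the whole utterance, which fits the requirement that there be no processing pauses.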

How did you go about choosing the robot’s appearance?

Well, there are actually two robots in the experiment – an older-looking one and a younger-looking one. The younger robot doesn’t get as much attention because I think the older robot looks more realistic. But I produced them with the idea of one being a younger version of the other. So, you have kind of the same robot.

I wanted to compare how people interacted with an older-looking robot and a younger-looking robot. What I found is that young people preferred to interact with the younger robot and older people preferred to interact with the older-looking robot.

I also gave them personalities. I thought, well, I’m quite young, so I’ll base the younger personality on myself. And I know my dad pretty well, he’s kind of old, so I modelled the older one on him. I had the younger robot be interested in what I’m interested in and the older one be interested in snooker and John Smith’s.

So, what are the potential applications of this type of work?

I always use Data from Star Trek as the perfect example for this, because he acts like this very humanistic interface between lots of different things: people and aliens – obviously aliens that don’t speak English so he acts as a translator. But he also acts as the interface between the ship’s computer and people.

So, things that would be very difficult for humans, say calculations, he’s able to translate that information and give it in a simplified way – a humanistic way, with emotion, with facial expressions. And that’s what I think this technology will eventually head towards.

We have to remember that not everybody can interact with technology effectively. We’re very privileged, I think, to have grown up with technology and to be able to use it. But there are lots of people in the world who don’t have that, so creating something like a humanoid robot would allow them to integrate with technology a lot more naturally.