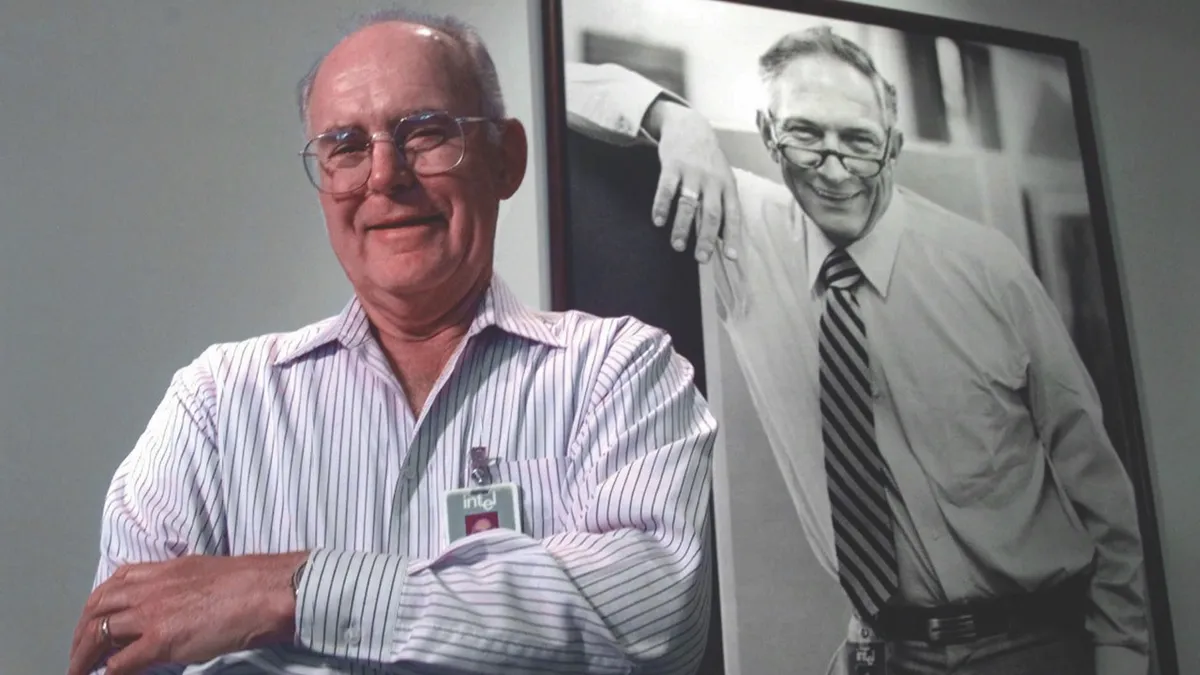

Compared to those of 50 years ago, the computer processors of today are fast. Crazily fast. Their speed has been doubling approximately every two years. This doubling effect is known as Moore’s Law, after Gordon Moore, the co-founder of Intel, who predicted this rate of progress back in 1965. If the top speed of cars had followed the same trend since 1965, we would be watching Lewis Hamilton fly around Silverstone at more than 11,000,000,000mph.
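To get a feel for how quickly repeated doubling compounds, the short Python sketch below works through the arithmetic. It assumes a doubling period of exactly two years and takes 2018, the year this article was published, as the end point. The function name is purely illustrative, and the 1965 starting speed it derives is only what the 11,000,000,000mph figure implies under those assumptions, not a number stated in the text.

```python
def growth_factor(start_year, end_year, doubling_period_years=2.0):
    """Return how many times larger a quantity becomes if it doubles
    once every `doubling_period_years` between the two years."""
    doublings = (end_year - start_year) / doubling_period_years
    return 2.0 ** doublings


if __name__ == "__main__":
    # Assumption: the comparison runs from 1965 to 2018.
    factor = growth_factor(1965, 2018)
    print(f"Doublings: {(2018 - 1965) / 2:.1f}")
    print(f"Growth factor: {factor:,.0f}x")

    # Work backwards from the article's figure to the implied 1965 speed.
    implied_start_mph = 11_000_000_000 / factor
    print(f"Implied 1965 top speed: {implied_start_mph:.0f}mph")
```

Twenty-six and a half doublings multiply the starting value by roughly 95 million, which is why even a modest starting speed ends up in the billions of miles per hour.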

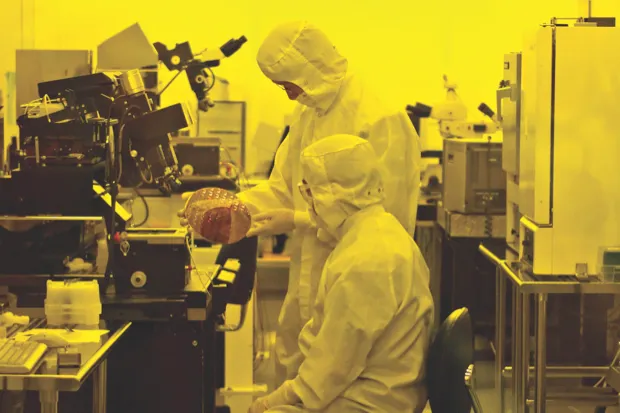

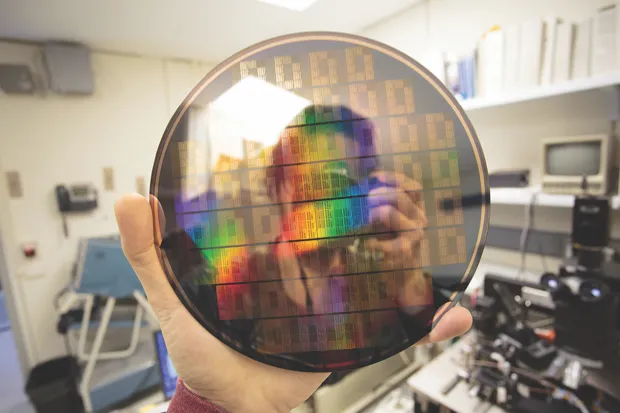

For the computer industry, his prediction became a golden rule, perhaps even a self-fulfilling prophecy. The chip manufacturers were inspired to attain the performance Moore’s Law forecast. And so they did, inventing ever more impressive ways to shrink the necessary components to fit into smaller and smaller areas of silicon, and speed up the rate at which those components interacted in the process.

Today, thanks to the large-scale integrated circuits used to make the increasingly powerful microprocessors, the computer industry has transformed the world. We have digitised almost every aspect of our lives, from food distribution to transport, and created new technologies that would never have been possible with older processors, such as social media, online gaming, robotics, augmented reality and machine learning.

The continuing advances predicted by Moore’s Law have made these transformations possible. But we’ve become blasé about the extraordinary progress, to the point where many software companies simply assume that it will continue. Meanwhile, as we create more data every day, we also create the need for vast warehouses of computers, known as the cloud, to store and process that data. And the more data we produce, the more computing power we need to analyse it.

But the story of runaway progress in silicon can’t continue forever. We’re now reaching the end of this amazing technological explosion and we’re running out of ways to make our computers faster. Despite the remarkable efforts of research engineers, you can only make transistors so small before you run out of room at the bottom. For example, Apple’s A11 chip, one of the best we have today, contains 4.3 billion transistors in an area of 87.66mm². Go much smaller than this and the transistors become so tiny that the effects of quantum physics start to interfere – electrons start to jump around and turn up in places where you don’t want them to be. With so little space, it also becomes difficult to organise the fine structure of the silicon wafer that’s essential to control its electrical properties. Pack in too many transistors and make them work faster, and the restricted flow of electrons within the chip can make it so hot that, without significant cooling, it will burn itself up.
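The A11 figures quoted above give a sense of just how crowded the silicon already is. The Python sketch below divides the die up evenly between its transistors to estimate an average density and spacing. This is a rough back-of-the-envelope calculation: the even division is an assumption, the function names are illustrative, and real chips devote much of their area to wiring and memory, so individual transistor features are considerably smaller than this average suggests.

```python
import math

TRANSISTORS = 4.3e9      # transistor count quoted for the A11
DIE_AREA_MM2 = 87.66     # die area quoted for the A11, in square millimetres
NM2_PER_MM2 = 1e12       # 1mm = 1,000,000nm, so 1mm^2 = 1e12 nm^2


def density_per_mm2(transistors, area_mm2):
    """Average number of transistors per square millimetre."""
    return transistors / area_mm2


def average_pitch_nm(transistors, area_mm2):
    """Side length, in nanometres, of the square patch of silicon each
    transistor would occupy if the die were divided up evenly."""
    area_per_transistor_nm2 = area_mm2 * NM2_PER_MM2 / transistors
    return math.sqrt(area_per_transistor_nm2)


if __name__ == "__main__":
    density = density_per_mm2(TRANSISTORS, DIE_AREA_MM2)
    pitch = average_pitch_nm(TRANSISTORS, DIE_AREA_MM2)
    print(f"{density:,.0f} transistors per square millimetre")
    print(f"About {pitch:.0f}nm between neighbours, on average")
```

On these numbers, every square millimetre holds around 49 million transistors, each with a patch of silicon only about 140 nanometres across to call its own.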

New innovations

Chip manufacturers have known about these problems for decades, and have been doing their best to work around them. We used to see microprocessors increase their clock speed (the base operating speed of a computer) every year, to make them compute more quickly.

We saw the 25MHz i486 in 1989, the 200MHz Pentium Pro in 1995 and the 3.8GHz Pentium 4 in 2004. But that was about as fast as we could make them tick without them becoming impossible to cool. Since then, manufacturers have had to make processors faster by using multiple cores that do their work in parallel – first dual-core, then quad-core, 8-core, 16-core and so on.

Today, it has become so difficult and expensive to continue to match the pace of progress predicted by Moore’s Law that almost all chip manufacturers have abandoned the race. It’s no longer cost-effective to continue in this direction and, as a result, there’s been a significant decline in the number of research and development labs working at the cutting edge of new chip-manufacturing processes. The age of Moore’s Law is nearly over.

Instead, the major manufacturers now focus their efforts on specialised chips that are designed to accelerate specific types of computation. The most common examples of this are graphics processors. They were originally created to perform many similar calculations in parallel in order to enable the blindingly fast graphics needed for computer games. They have now evolved into general-purpose processors, which are used for data analysis and machine learning.

Other companies have introduced their own application-specific integrated circuits (ASICs), such as Google’s Tensor Processing Unit. Google recently began beta testing these chips, which are arranged in pods of 256 working in parallel and were developed specifically to run machine learning software at industry-leading speeds.

The end of Moore’s Law should not be seen as the end of progress. Far from it. We now find ourselves in a new era of innovation, where new computer architectures and technologies are being explored seriously for the first time in decades. These new technologies show that while computing power may no longer be increasing at an exponential rate, progress is continuing at quite a pace. While this will likely result in faster conventional computation, it may also give us the ability to process information in an entirely new way.

Here are some of the technologies that could revolutionise the world of computing when Moore’s Law takes its curtain call.

Memristors

The memristor began life as a hypothetical electronic component, envisioned by the circuit theorist Leon Chua in 1971. The idea was that this component would remember the electrical current that had flowed through it, and its resistance would vary according to that history. It was intended to be a fundamental circuit component, just as important as the transistor or capacitor.
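The idea of a resistance that depends on history can be illustrated with a toy model. The Python sketch below tracks the net charge that has passed through an imaginary device and slides its resistance between two extremes accordingly. Everything here is an assumption made for illustration: the class name, the resistance values, the switching charge and the straight-line relationship between charge and resistance are all hypothetical, and the model is not meant to describe any real device or the original theory in detail.

```python
class ToyMemristor:
    """A deliberately simplified memristor: its resistance depends on the
    net charge that has flowed through it. All values are hypothetical."""

    def __init__(self, r_on=100.0, r_off=16000.0, q_max=1e-4):
        self.r_on = r_on      # resistance (ohms) when fully "on"
        self.r_off = r_off    # resistance (ohms) when fully "off"
        self.q_max = q_max    # charge (coulombs) needed to switch fully on
        self.charge = 0.0     # net charge that has passed through so far

    def apply_current(self, current_amps, seconds):
        """Pass a constant current for a while and record the charge moved."""
        self.charge += current_amps * seconds
        # The state saturates: it cannot go beyond fully on or fully off.
        self.charge = min(max(self.charge, 0.0), self.q_max)

    @property
    def resistance(self):
        """Interpolate linearly between r_off and r_on using stored charge."""
        fraction_on = self.charge / self.q_max
        return self.r_off - (self.r_off - self.r_on) * fraction_on


if __name__ == "__main__":
    device = ToyMemristor()
    print(f"Fresh device: {device.resistance:.0f} ohms")
    device.apply_current(1e-3, 0.05)   # 1mA flowing for 50ms
    print(f"After a forward pulse: {device.resistance:.0f} ohms")
    device.apply_current(0.0, 10.0)    # no current: the state is retained
    print(f"After sitting idle: {device.resistance:.0f} ohms")
```

The point of the sketch is the last step: with no current flowing, the resistance stays where the earlier pulse left it, which is the "memory" in memristor.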

When organised in the right way, memristors can replace transistors altogether. As they can be packed more densely than transistors, they enable faster processors or higher-capacity memories. Some regard memristors as the ideal component for modelling neural networks and performing machine learning. Yet despite the theory, it has proven difficult to make memristors. The first commercial ones were released in 2017, though there is some debate over whether they’re the same as the component originally hypothesised.

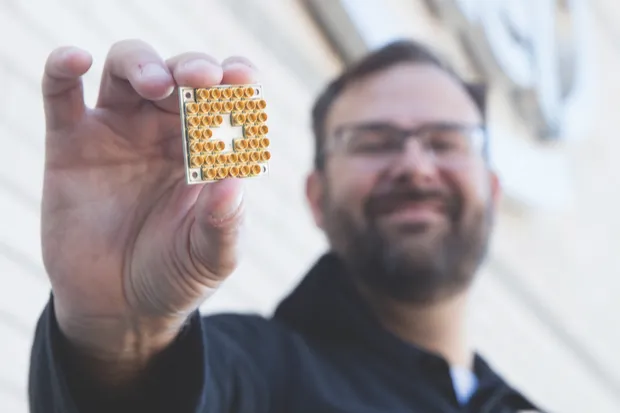

Quantum computing

Perhaps the most familiar of all contenders looking to supersede conventional silicon chips is quantum computing. Instead of letting quantum effects prevent our tiny transistors from working, why not build devices that take advantage of the tiny quantum effects to make them work?

Rather than bits (the basic unit of information used in conventional computing), quantum computing relies on building blocks named qubits. Whereas a regular bit can only take a value of either 0 or 1 (think of the north and south poles on a sphere), a qubit can take any value on the whole surface of the sphere. This allows qubits to process more information with less energy.
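The sphere picture can be made concrete with a little trigonometry. In the standard textbook description, a point on the sphere is given by two angles, and those angles fix the two complex "amplitudes" of the qubit, which in turn set the odds of reading a 0 or a 1 when it is measured. The Python sketch below follows that convention; the function names are illustrative, and it models a single idealised qubit rather than any particular company’s hardware.

```python
import cmath
import math


def qubit_from_sphere(theta, phi):
    """Return the amplitudes (a0, a1) of a qubit at polar angle `theta`
    and azimuthal angle `phi` (both in radians) on the sphere.
    theta = 0 is the north pole (the value 0); theta = pi is the south
    pole (the value 1)."""
    a0 = complex(math.cos(theta / 2))
    a1 = cmath.exp(1j * phi) * math.sin(theta / 2)
    return a0, a1


def measurement_probabilities(theta, phi):
    """Probabilities of reading 0 or 1 when the qubit is measured."""
    a0, a1 = qubit_from_sphere(theta, phi)
    return abs(a0) ** 2, abs(a1) ** 2


if __name__ == "__main__":
    points = [("north pole", 0.0), ("equator", math.pi / 2), ("south pole", math.pi)]
    for name, theta in points:
        p0, p1 = measurement_probabilities(theta, 0.0)
        print(f"{name}: P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

At the poles the qubit behaves like an ordinary bit, always giving the same answer. Anywhere else on the sphere it holds a blend of 0 and 1, with the two probabilities always adding up to one.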

Today there are several early examples of quantum computers, each following a slightly different design, developed by IBM, Google, Rigetti and Intel. It remains to be seen which company and which type of quantum computer will win the race to dominate this exotic new form of computing, but initial results certainly look promising.

Graphene processors

There are lots of emerging exotic materials that could potentially be put to use in electronics. Graphene – the amazing new material that is made from a lattice of carbon atoms and is 40 times stronger than diamond – is one such contender because it’s a remarkable conductor of electricity.

Recent research by US universities has used graphene to make a transistor that works 1,000 times faster than its silicon cousin. With less electrical resistance, the speed of graphene processors could be increased a thousand-fold and still use less power than conventional technology.

Living chips

Many researchers are working on building computers that take inspiration from neurons in the brain. The Human Brain Project, for example, is a massive EU-funded project that’s investigating how to build new algorithms and computers that mimic the way the brain works. But some researchers are going even further.

Koniku is the first company dedicated to building computers using living neurons. “We take the radical view that you can actually compute with real, biological neurons,” says founder Oshiorenoya Agabi. Koniku aims to grow living neurons, programmed by altering the DNA, and keep them alive and functional for up to two years in a ‘living chip’. The result may be a biological processor that Koniku says could be used to detect the odours of drugs or explosives for security and military purposes.

This is an extract from issue 321 of BBC Focus magazine.

[This article was first published in May 2018]